Display glacier area and thickness changes on a grid#

from oggm import cfg

from oggm import tasks, utils, workflow, graphics, DEFAULT_BASE_URL

import salem

import xarray as xr

import numpy as np

import glob

import os

import matplotlib.pyplot as plt

This notebook proposes a method for redistributing glacier ice that has been simulated along the flowline after a glacier retreat simulation. Extrapolating the glacier ice onto a map involves certain assumptions and trade-offs. Depending on the purpose, different choices may be preferred. For example, higher resolution may be desired for visualization compared to using the output for a hydrological model. It is possible to add different options to the final function to allow users to select the option that best suits their needs.

This notebook demonstrates the redistribution process using a single glacier. Its purpose is to initiate further discussion before incorporating it into the main OGGM code base (currently, it is in the sandbox).

Pick a glacier#

# Initialize OGGM and set up the default run parameters

cfg.initialize(logging_level='WARNING')

# Local working directory (where OGGM will write its output)

# WORKING_DIR = utils.gettempdir('OGGM_distr4')

cfg.PATHS['working_dir'] = utils.get_temp_dir('OGGM_distributed', reset=True)

2025-06-05 12:39:25: oggm.cfg: Reading default parameters from the OGGM `params.cfg` configuration file.

2025-06-05 12:39:25: oggm.cfg: Multiprocessing switched OFF according to the parameter file.

2025-06-05 12:39:25: oggm.cfg: Multiprocessing: using all available processors (N=4)

rgi_ids = ['RGI60-11.01450', # Aletsch

'RGI60-11.01478'] # Fieschergletscher

gdirs = workflow.init_glacier_directories(rgi_ids, prepro_base_url=DEFAULT_BASE_URL, from_prepro_level=4, prepro_border=80)

2025-06-05 12:39:26: oggm.workflow: init_glacier_directories from prepro level 4 on 2 glaciers.

2025-06-05 12:39:26: oggm.workflow: Execute entity tasks [gdir_from_prepro] on 2 glaciers

Experiment: a random warming simulation#

Here we use a random climate, but you can use any GCM, as long as glaciers are getting smaller, not bigger!

# Do a random run with a bit of warming

workflow.execute_entity_task(

tasks.run_random_climate,

gdirs,

ys=2020, ye=2100, # Although the simulation is idealised, lets use real dates for the animation

y0=2009, halfsize=10, # Random climate of 1999-2019

seed=1, # Random number generator seed

temperature_bias=1.5, # additional warming - change for other scenarios

store_fl_diagnostics=True, # important! This will be needed for the redistribution

init_model_filesuffix='_spinup_historical', # start from the spinup run

output_filesuffix='_random_s1', # optional - here I just want to make things explicit as to which run we are using afterwards

);

2025-06-05 12:39:26: oggm.workflow: Execute entity tasks [run_random_climate] on 2 glaciers

Redistribute: preprocessing#

The required tasks can be found in the distribute_2d module of the sandbox:

from oggm.sandbox import distribute_2d

# This is to add a new topography to the file (smoothed differently)

workflow.execute_entity_task(distribute_2d.add_smoothed_glacier_topo, gdirs)

# This is to get the bed map at the start of the simulation

workflow.execute_entity_task(tasks.distribute_thickness_per_altitude, gdirs)

# This is to prepare the glacier directory for the interpolation (needs to be done only once)

workflow.execute_entity_task(distribute_2d.assign_points_to_band, gdirs);

2025-06-05 12:39:27: oggm.workflow: Execute entity tasks [add_smoothed_glacier_topo] on 2 glaciers

2025-06-05 12:39:27: oggm.workflow: Execute entity tasks [distribute_thickness_per_altitude] on 2 glaciers

2025-06-05 12:39:29: oggm.workflow: Execute entity tasks [assign_points_to_band] on 2 glaciers

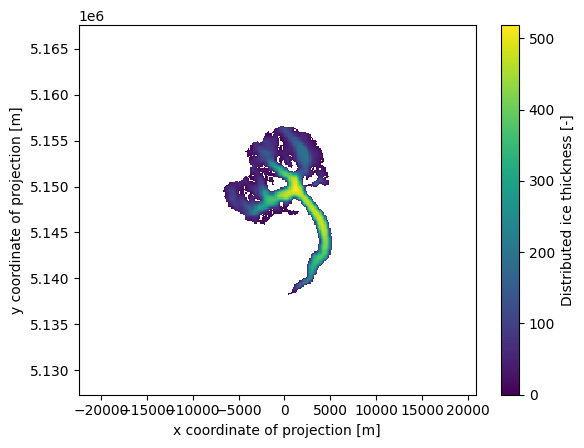

Let’s have a look at what we just did:

gdir = gdirs[0] # here for Aletsch

#gdir = gdirs[1] # uncomment for Fieschergletscher

with xr.open_dataset(gdir.get_filepath('gridded_data')) as ds:

ds = ds.load()

# Inititial glacier thickness

f, ax = plt.subplots()

ds.distributed_thickness.plot(ax=ax);

ax.axis('equal');

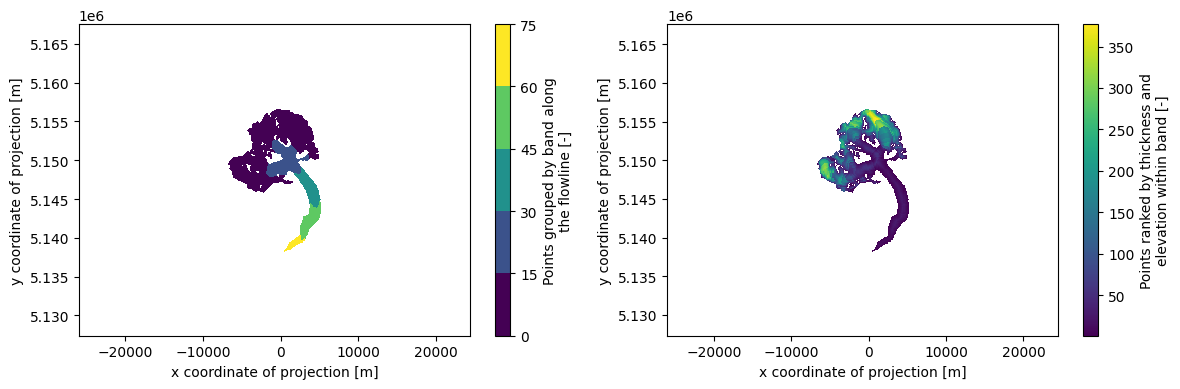

# Which points belongs to which band, and then within one band which are the first to melt

f, (ax1, ax2) = plt.subplots(1, 2, figsize=(12, 4))

ds.band_index.plot.contourf(ax=ax1);

ds.rank_per_band.plot(ax=ax2);

ax1.axis('equal'); ax2.axis('equal'); plt.tight_layout();

Redistribute simulation#

The tasks above need to be run only once. The next one however should be done for each simulation:

ds = workflow.execute_entity_task(

distribute_2d.distribute_thickness_from_simulation,

gdirs,

input_filesuffix='_random_s1', # Use the simulation we just did

concat_input_filesuffix='_spinup_historical', # Concatenate with the historical spinup

output_filesuffix='', # filesuffix added to the output filename gridded_simulation.nc, if empty input_filesuffix is used

)

2025-06-05 12:39:29: oggm.workflow: Execute entity tasks [distribute_thickness_from_simulation] on 2 glaciers

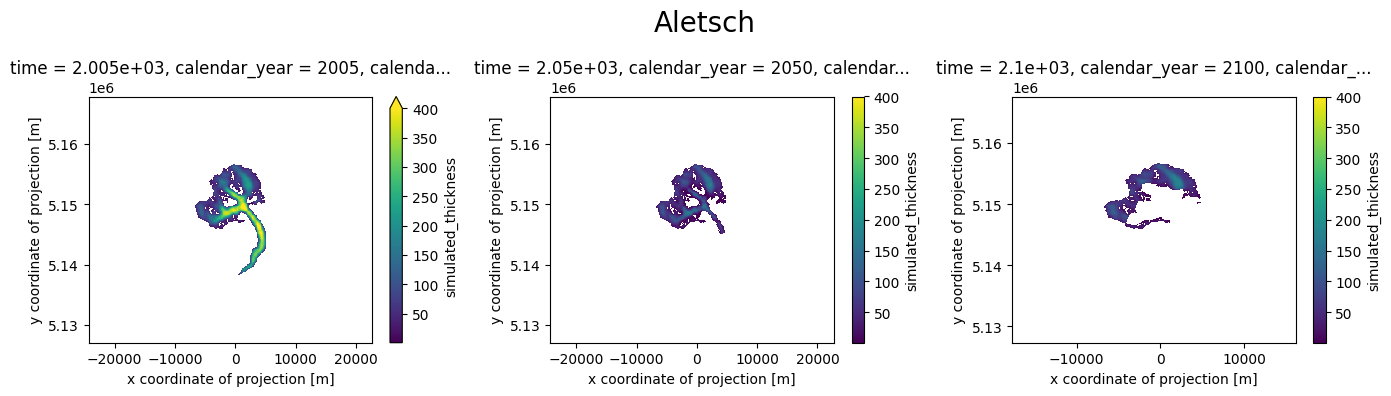

Plot#

Let’s have a look!

# # This below is only to open the file again later if needed

# with xr.open_dataset(gdir.get_filepath('gridded_simulation', filesuffix='_random_s1')) as ds:

# ds = ds.load()

def plot_distributed_thickness(ds, title):

f, (ax1, ax2, ax3) = plt.subplots(1, 3, figsize=(14, 4))

ds.simulated_thickness.sel(time=2005).plot(ax=ax1, vmax=400);

ds.simulated_thickness.sel(time=2050).plot(ax=ax2, vmax=400);

ds.simulated_thickness.sel(time=2100).plot(ax=ax3, vmax=400);

ax1.axis('equal'); ax2.axis('equal'); f.suptitle(title, fontsize=20);

plt.tight_layout();

plot_distributed_thickness(ds[0], 'Aletsch')

# plot_distributed_thickness(ds[1], 'Fieschergletscher')

Note: the simulation before the RGI date cannot be trusted - it is the result of the dynamical spinup. Furthermore, if the area is larger than the RGI glacier, the redistribution algorithm will put all mass in the glacier mask, which is not what we want:

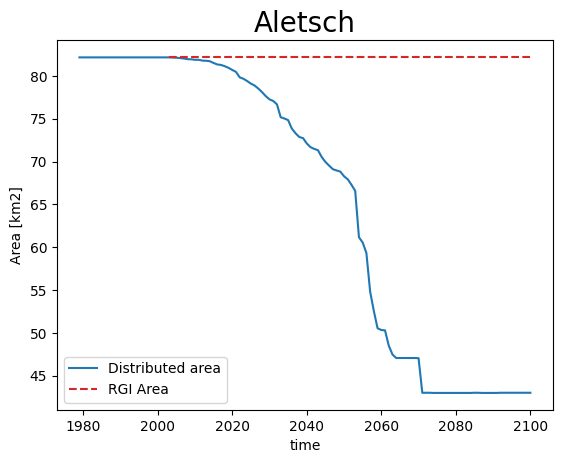

def plot_area(ds, gdir, title):

area = (ds.simulated_thickness > 0).sum(dim=['x', 'y']) * gdir.grid.dx**2 * 1e-6

area.plot(label='Distributed area');

plt.hlines(gdir.rgi_area_km2, gdir.rgi_date, 2100, color='C3', linestyles='--', label='RGI Area');

plt.legend(loc='lower left'); plt.ylabel('Area [km2]'); plt.title(title, fontsize=20); plt.show();

plot_area(ds[0], gdirs[0], 'Aletsch')

# plot_area(ds[1], gdirs[1], 'Fieschergletscher')

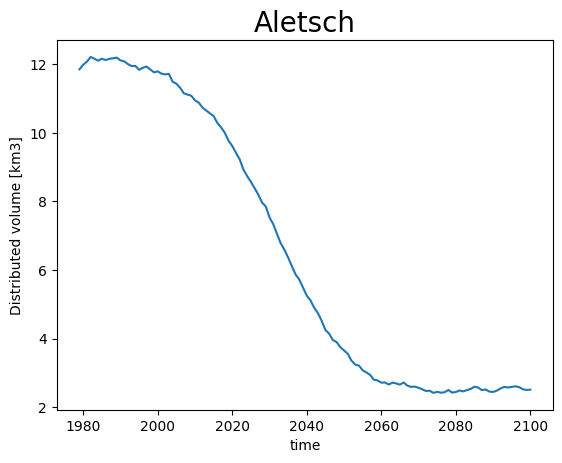

Volume however is conserved:

def plot_volume(ds, gdir, title):

vol = ds.simulated_thickness.sum(dim=['x', 'y']) * gdir.grid.dx**2 * 1e-9

vol.plot(label='Distributed volume'); plt.ylabel('Distributed volume [km3]');

plt.title(title, fontsize=20); plt.show();

plot_volume(ds[0], gdirs[0], 'Aletsch')

# plot_volume(ds[1], gdirs[1], 'Fieschergletscher')

Therefore, lets just keep all data after the RGI year only:

for i, (ds_single, gdir) in enumerate(zip(ds, gdirs)):

ds[i] = ds_single.sel(time=slice(gdir.rgi_date, None))

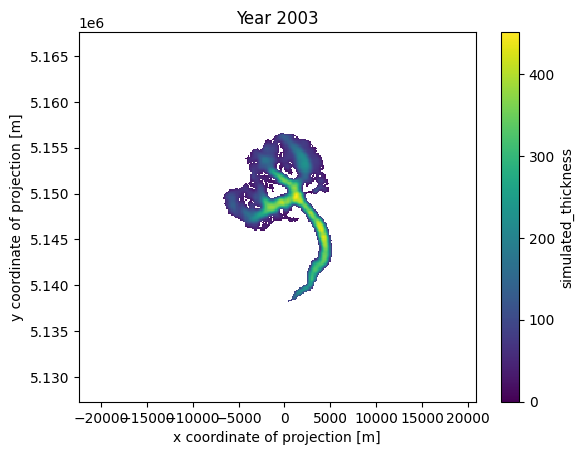

Animation!#

from matplotlib import animation

from IPython.display import HTML, display

# Get a handle on the figure and the axes

fig, ax = plt.subplots()

thk = ds[0]['simulated_thickness'] # Aletsch

# thk = ds[1]['simulated_thickness'] # Fieschergletscher

# Plot the initial frame.

cax = thk.isel(time=0).plot(ax=ax,

add_colorbar=True,

cmap='viridis',

vmin=0, vmax=thk.max(),

cbar_kwargs={

'extend':'neither'

}

)

ax.axis('equal')

def animate(frame):

ax.set_title(f'Year {int(thk.time[frame])}')

cax.set_array(thk.values[frame, :].flatten())

ani_glacier = animation.FuncAnimation(fig, animate, frames=len(thk.time), interval=100);

HTML(ani_glacier.to_jshtml())

# Write to mp4?

# FFwriter = animation.FFMpegWriter(fps=10)

# ani2.save('animation.mp4', writer=FFwriter)

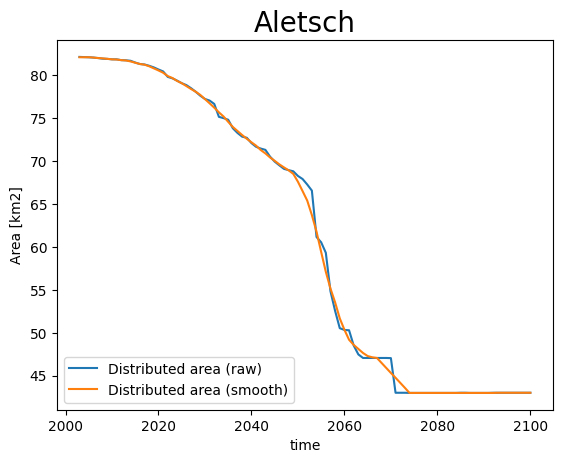

Finetune the visualisation#

When applying the tools you might see that sometimes the timeseries are “shaky”, or have sudden changes in area. This comes from a few reasons:

OGGM does not distinguish between ice and snow, i.e. when it snows a lot sometimes OGGM thinks there is a glacier for a couple of years.

the trapezoidal flowlines result in sudden (“step”) area changes when they melt entirely.

on small glaciers, the changes within one year can be big, and you may want to have more intermediate frames

We implement some workarounds for these situations:

ds_smooth = workflow.execute_entity_task(

distribute_2d.distribute_thickness_from_simulation,

gdirs,

input_filesuffix='_random_s1',

concat_input_filesuffix='_spinup_historical',

ys=2003, ye=2100, # make the output smaller

output_filesuffix='_random_s1_smooth', # do not overwrite the previous file (optional)

# add_monthly=True, # more frames! (12 times more - we comment for the demo, but recommend it)

rolling_mean_smoothing=7, # smooth the area time series

fl_thickness_threshold=1, # avoid snow patches to be nisclassified

)

2025-06-05 12:39:46: oggm.workflow: Execute entity tasks [distribute_thickness_from_simulation] on 2 glaciers

def plot_area_smoothed(ds_smooth, ds, gdir, title):

area = (ds.simulated_thickness > 0).sum(dim=['x', 'y']) * gdir.grid.dx**2 * 1e-6

area.plot(label='Distributed area (raw)');

area = (ds_smooth.simulated_thickness > 0).sum(dim=['x', 'y']) * gdir.grid.dx**2 * 1e-6

area.plot(label='Distributed area (smooth)');

plt.legend(loc='lower left'); plt.ylabel('Area [km2]');

plt.title(title, fontsize=20); plt.show();

plot_area_smoothed(ds_smooth[0], ds[0], gdirs[0], 'Aletsch')

# plot_area_smoothed(ds_smooth[1], ds[1], gdirs[1], 'Fieschergletscher')

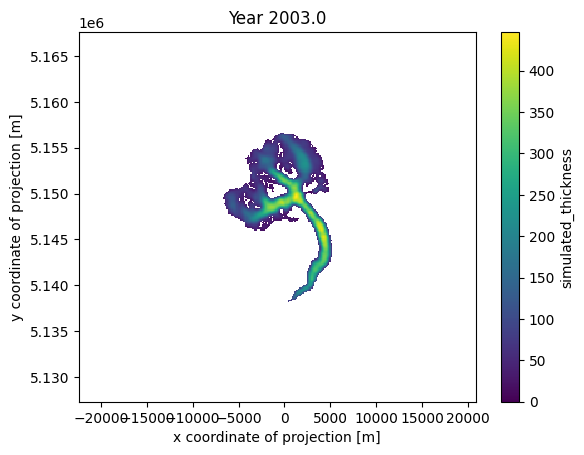

# Get a handle on the figure and the axes

fig, ax = plt.subplots()

thk = ds_smooth[0]['simulated_thickness'] # Aletsch

# thk = ds_smooth[1]['simulated_thickness'] # Fieschergletscher

# Plot the initial frame.

cax = thk.isel(time=0).plot(ax=ax,

add_colorbar=True,

cmap='viridis',

vmin=0, vmax=thk.max(),

cbar_kwargs={

'extend':'neither'

}

)

ax.axis('equal')

def animate(frame):

ax.set_title(f'Year {float(thk.time[frame]):.1f}')

cax.set_array(thk.values[frame, :].flatten())

ani_glacier = animation.FuncAnimation(fig, animate, frames=len(thk.time), interval=100);

# Visualize

HTML(ani_glacier.to_jshtml())

# Write to mp4?

# FFwriter = animation.FFMpegWriter(fps=120)

# ani2.save('animation_smooth.mp4', writer=FFwriter)

Merge redistributed thickness from multiple glaciers#

If you’re working in a region with multiple glaciers, displaying the evolution of all glaciers simultaneously can be convenient. To facilitate this, we offer a tool that merges the simulated distributed thicknesses of multiple glaciers.

This process can be very memory-intensive. To prevent memory overflow issues, we, by default, merge the data for each year into a separate file. If you have sufficient resources, you can set save_as_multiple_files=False to compile the data into a single file at the end. However, with xarray.open_mfdataset, you have the capability to seamlessly open and work with these multiple files as if they were one.

For further explanation on merging gridded_data, please consult the tutorial Ingest gridded products such as ice velocity into OGGM.

simulation_filesuffix = '_random_s1_smooth' # saved in variable for later opening of the files

distribute_2d.merge_simulated_thickness(

gdirs, # the gdirs we want to merge

simulation_filesuffix=simulation_filesuffix, # the name of the simulation

years_to_merge=np.arange(2005, 2101, 5), # for demonstration I only pick some years, if this is None all years are merged

add_topography=True, # if you do not need topogrpahy setting this to False will decrease computing time

preserve_totals=True, # preserve individual glacier volumes during merging

reset=True,

)

2025-06-05 12:40:02: oggm.sandbox.distribute_2d: Applying global task merge_simulated_thickness on 2 glaciers

2025-06-05 12:40:02: oggm.workflow: Applying global task merge_gridded_data on 2 glaciers

---------------------------------------------------------------------------

ModuleNotFoundError Traceback (most recent call last)

File /usr/local/pyenv/versions/3.11.11/lib/python3.11/site-packages/oggm/utils/_downloads.py:543, in _progress_urlretrieve(url, cache_name, reset, auth, timeout)

542 try:

--> 543 from progressbar import DataTransferBar, UnknownLength

544 pbar = None

ModuleNotFoundError: No module named 'progressbar'

During handling of the above exception, another exception occurred:

HttpDownloadError Traceback (most recent call last)

Cell In[24], line 2

1 simulation_filesuffix = '_random_s1_smooth' # saved in variable for later opening of the files

----> 2 distribute_2d.merge_simulated_thickness(

3 gdirs, # the gdirs we want to merge

4 simulation_filesuffix=simulation_filesuffix, # the name of the simulation

5 years_to_merge=np.arange(2005, 2101, 5), # for demonstration I only pick some years, if this is None all years are merged

6 add_topography=True, # if you do not need topogrpahy setting this to False will decrease computing time

7 preserve_totals=True, # preserve individual glacier volumes during merging

8 reset=True,

9 )

File /usr/local/pyenv/versions/3.11.11/lib/python3.11/site-packages/oggm/utils/_workflow.py:557, in global_task.__call__.<locals>._global_task(gdirs, **kwargs)

553 self.log.workflow('Applying global task %s on %s glaciers',

554 task_func.__name__, len(gdirs))

556 # Run the task

--> 557 return task_func(gdirs, **kwargs)

File /usr/local/pyenv/versions/3.11.11/lib/python3.11/site-packages/oggm/sandbox/distribute_2d.py:558, in merge_simulated_thickness(gdirs, output_folder, output_filename, simulation_filesuffix, years_to_merge, keep_dem_file, interp, preserve_totals, smooth_radius, use_glacier_mask, add_topography, use_multiprocessing, save_as_multiple_files, reset)

554 ds.to_netcdf(fp)

556 if save_as_multiple_files:

557 # first the _topo_data file

--> 558 workflow.merge_gridded_data(

559 gdirs,

560 output_folder=output_folder,

561 output_filename=f"{output_filename}_topo_data",

562 input_file='gridded_data',

563 input_filesuffix='',

564 included_variables=['glacier_ext',

565 'glacier_mask',

566 'distributed_thickness',

567 ],

568 preserve_totals=preserve_totals,

569 smooth_radius=smooth_radius,

570 use_glacier_mask=use_glacier_mask,

571 add_topography=add_topography,

572 keep_dem_file=keep_dem_file,

573 interp=interp,

574 use_multiprocessing=use_multiprocessing,

575 return_dataset=False,

576 reset=reset)

578 # recalculate bed topography after reprojection, if topo was added

579 if add_topography:

File /usr/local/pyenv/versions/3.11.11/lib/python3.11/site-packages/oggm/utils/_workflow.py:557, in global_task.__call__.<locals>._global_task(gdirs, **kwargs)

553 self.log.workflow('Applying global task %s on %s glaciers',

554 task_func.__name__, len(gdirs))

556 # Run the task

--> 557 return task_func(gdirs, **kwargs)

File /usr/local/pyenv/versions/3.11.11/lib/python3.11/site-packages/oggm/workflow.py:985, in merge_gridded_data(gdirs, output_folder, output_filename, input_file, input_filesuffix, included_variables, preserve_totals, smooth_radius, use_glacier_mask, add_topography, keep_dem_file, interp, use_multiprocessing, return_dataset, reset)

983 dem_source = None

984 dem_gdir = gdirs[0]

--> 985 gis.get_dem_for_grid(combined_grid, output_folder,

986 source=dem_source, gdir=dem_gdir)

987 # unwrapped is needed to execute process_dem without the entity_task

988 # overhead (this would need a valid gdir)

989 gis.process_dem.unwrapped(gdir=None, grid=combined_grid,

990 fpath=output_folder,

991 output_filename=output_filename)

File /usr/local/pyenv/versions/3.11.11/lib/python3.11/site-packages/oggm/core/gis.py:482, in get_dem_for_grid(grid, fpath, source, gdir)

471 grid_prop = {

472 'utm_proj': grid.proj,

473 'dx': grid.dx,

(...) 477 'ny': grid.ny

478 }

480 source = check_dem_source(source, extent_ll, rgi_id=rgi_id)

--> 482 dem_list, dem_source = get_topo_file((minlon, maxlon), (minlat, maxlat),

483 gdir=gdir,

484 dx_meter=grid_prop['dx'],

485 source=source)

487 if rgi_id is not None:

488 log.debug('(%s) DEM source: %s', rgi_id, dem_source)

File /usr/local/pyenv/versions/3.11.11/lib/python3.11/site-packages/oggm/utils/_downloads.py:2492, in get_topo_file(lon_ex, lat_ex, gdir, dx_meter, zoom, source)

2490 zones = nasadem_zone(lon_ex, lat_ex)

2491 for z in zones:

-> 2492 files.append(_download_nasadem_file(z))

2494 # filter for None (e.g. oceans)

2495 files = [s for s in files if s]

File /usr/local/pyenv/versions/3.11.11/lib/python3.11/site-packages/oggm/utils/_downloads.py:898, in _download_nasadem_file(zone)

896 def _download_nasadem_file(zone):

897 with get_lock():

--> 898 return _download_nasadem_file_unlocked(zone)

File /usr/local/pyenv/versions/3.11.11/lib/python3.11/site-packages/oggm/utils/_downloads.py:918, in _download_nasadem_file_unlocked(zone)

915 return outpath

917 # Did we download it yet?

--> 918 dest_file = file_downloader(wwwfile)

920 # None means we tried hard but we couldn't find it

921 if not dest_file:

File /usr/local/pyenv/versions/3.11.11/lib/python3.11/site-packages/oggm/utils/_downloads.py:611, in file_downloader(www_path, retry_max, sleep_on_retry, cache_name, reset, auth, timeout)

609 try:

610 retry_counter += 1

--> 611 local_path = _progress_urlretrieve(www_path, cache_name=cache_name,

612 reset=reset, auth=auth,

613 timeout=timeout)

614 # if no error, exit

615 break

File /usr/local/pyenv/versions/3.11.11/lib/python3.11/site-packages/oggm/utils/_downloads.py:567, in _progress_urlretrieve(url, cache_name, reset, auth, timeout)

565 return res

566 except ImportError:

--> 567 return oggm_urlretrieve(url, cache_obj_name=cache_name,

568 reset=reset, auth=auth, timeout=timeout)

File /usr/local/pyenv/versions/3.11.11/lib/python3.11/site-packages/oggm/utils/_downloads.py:535, in oggm_urlretrieve(url, cache_obj_name, reset, reporthook, auth, timeout)

531 _classic_urlretrieve(url, cache_path, reporthook, auth,

532 timeout)

533 return cache_path

--> 535 return _verified_download_helper(cache_obj_name, _dlf, reset)

File /usr/local/pyenv/versions/3.11.11/lib/python3.11/site-packages/oggm/utils/_downloads.py:341, in _verified_download_helper(cache_obj_name, dl_func, reset)

334 def _verified_download_helper(cache_obj_name, dl_func, reset=False):

335 """Helper function for downloads.

336

337 Verifies the size and hash of the downloaded file against the included

338 list of known static files.

339 Uses _cached_download_helper to perform the actual download.

340 """

--> 341 path = _cached_download_helper(cache_obj_name, dl_func, reset)

343 dl_verify = cfg.PARAMS.get('dl_verify', False)

345 if dl_verify and path and cache_obj_name not in cfg.DL_VERIFIED:

File /usr/local/pyenv/versions/3.11.11/lib/python3.11/site-packages/oggm/utils/_downloads.py:325, in _cached_download_helper(cache_obj_name, dl_func, reset)

322 mkdir(os.path.dirname(cache_path))

324 try:

--> 325 cache_path = _call_dl_func(dl_func, cache_path)

326 except BaseException:

327 if os.path.exists(cache_path):

File /usr/local/pyenv/versions/3.11.11/lib/python3.11/site-packages/oggm/utils/_downloads.py:267, in _call_dl_func(dl_func, cache_path)

264 def _call_dl_func(dl_func, cache_path):

265 """Helper so the actual call to downloads can be overridden

266 """

--> 267 return dl_func(cache_path)

File /usr/local/pyenv/versions/3.11.11/lib/python3.11/site-packages/oggm/utils/_downloads.py:526, in oggm_urlretrieve.<locals>._dlf(cache_path)

524 logger.info("Downloading %s to %s..." % (url, cache_path))

525 try:

--> 526 _requests_urlretrieve(url, cache_path, reporthook, auth, timeout)

527 except requests.exceptions.InvalidSchema:

528 if 'ftps://' in url:

File /usr/local/pyenv/versions/3.11.11/lib/python3.11/site-packages/oggm/utils/_downloads.py:381, in _requests_urlretrieve(url, path, reporthook, auth, timeout)

379 with requests.get(url, stream=True, auth=auth, timeout=timeout) as r:

380 if r.status_code != 200:

--> 381 raise HttpDownloadError(r.status_code, url)

382 r.raise_for_status()

384 size = r.headers.get('content-length') or -1

HttpDownloadError: (401, 'https://e4ftl01.cr.usgs.gov/MEASURES/NASADEM_HGT.001/2000.02.11/NASADEM_HGT_n46e007.zip')

# by default the resulting files are saved in cfg.PATHS['working_dir']

# with names gridded_simulation_merged{simulation_filesuffix}{yr}.

# To open all at once we first get all corresponding files

files_to_open = glob.glob(

os.path.join(

cfg.PATHS['working_dir'], # the default output_folder

f'gridded_simulation_merged{simulation_filesuffix}*.nc', # with the * we open all files which matches the pattern

)

)

files_to_open

[]

You will notice that there is a file for each year, as well as one file with the suffix _topo_data. As the name suggests, this file contains the topography and gridded-outline information of the merged glaciers, which could be useful for visualizations.

Now we open all files in one dataset with:

with xr.open_mfdataset(files_to_open) as ds_merged:

ds_merged = ds_merged.load()

---------------------------------------------------------------------------

OSError Traceback (most recent call last)

Cell In[26], line 1

----> 1 with xr.open_mfdataset(files_to_open) as ds_merged:

2 ds_merged = ds_merged.load()

File /usr/local/pyenv/versions/3.11.11/lib/python3.11/site-packages/xarray/backends/api.py:1596, in open_mfdataset(paths, chunks, concat_dim, compat, preprocess, engine, data_vars, coords, combine, parallel, join, attrs_file, combine_attrs, **kwargs)

1593 paths = _find_absolute_paths(paths, engine=engine, **kwargs)

1595 if not paths:

-> 1596 raise OSError("no files to open")

1598 paths1d: list[str | ReadBuffer]

1599 if combine == "nested":

OSError: no files to open

ds_merged

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In[27], line 1

----> 1 ds_merged

NameError: name 'ds_merged' is not defined

Now we can look at the result with a plot:

plot_distributed_thickness(ds_merged, 'Aletsch and Fieschergletscher')

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In[28], line 1

----> 1 plot_distributed_thickness(ds_merged, 'Aletsch and Fieschergletscher')

NameError: name 'ds_merged' is not defined

Alternatively, you can create an animation using the merged data, displaying a value every 5 years, as specified above with years_to_merge:

# Get a handle on the figure and the axes

fig, ax = plt.subplots()

thk = ds_merged['simulated_thickness']

# Plot the initial frame.

cax = thk.isel(time=0).plot(ax=ax,

add_colorbar=True,

cmap='viridis',

vmin=0, vmax=thk.max(),

cbar_kwargs={

'extend':'neither'

}

)

ax.axis('equal')

def animate(frame):

ax.set_title(f'Year {float(thk.time[frame]):.1f}')

cax.set_array(thk.values[frame, :].flatten())

ani_glacier = animation.FuncAnimation(fig, animate, frames=len(thk.time), interval=200);

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In[29], line 4

1 # Get a handle on the figure and the axes

2 fig, ax = plt.subplots()

----> 4 thk = ds_merged['simulated_thickness']

6 # Plot the initial frame.

7 cax = thk.isel(time=0).plot(ax=ax,

8 add_colorbar=True,

9 cmap='viridis',

(...) 13 }

14 )

NameError: name 'ds_merged' is not defined

# Visualize

HTML(ani_glacier.to_jshtml())

For visualization purposes, the merged dataset also includes the topography of the entire region, encompassing the glacier surfaces:

cmap=salem.get_cmap('topo')

ds_merged.topo.plot(cmap=cmap)

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In[31], line 2

1 cmap=salem.get_cmap('topo')

----> 2 ds_merged.topo.plot(cmap=cmap)

NameError: name 'ds_merged' is not defined

Or the estimated bedrock topography, without ice:

ds_merged.bedrock.plot(cmap=cmap)

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In[32], line 1

----> 1 ds_merged.bedrock.plot(cmap=cmap)

NameError: name 'ds_merged' is not defined

Nice 3D videos#

We are working on a tool to make even nicer videos like this one:

from IPython.display import Video

Video("../../img/mittelbergferner.mp4", embed=True, width=700)

The WIP tool is available here: OGGM/oggm-3dviz